Beyond Language Models: Specialized Foundation Models and National Security

Robotics foundation models, manufacturing foundation models, large circuit models, large physics models, geospatial foundation models, and more!

At this point, most of us are familiar with large language models (LLMs) like GPT-4, the generative AI model that powers ChatGPT. We are comfortable interacting with an LLM via natural language text, “chatting” with the model the same way we might text with a friend.

Over the past year, researchers and startups alike have pushed generative AI beyond general text-based LLMs and begun to develop specialized foundation models: foundation models (FMs) custom-trained on domain-specific data and data types. As I’ve highlighted in past posts, general text-based LLMs like GPT-4 are already having an effect on national security. However, specialized foundation models will be crucial to fully unlocking generative AI’s potential impact on national security. Specialized foundation models are changing the way we approach robotics, geospatial intelligence analytics, manufacturing, engineering, materials science, physics, math, biology, autonomous vehicles, and more.

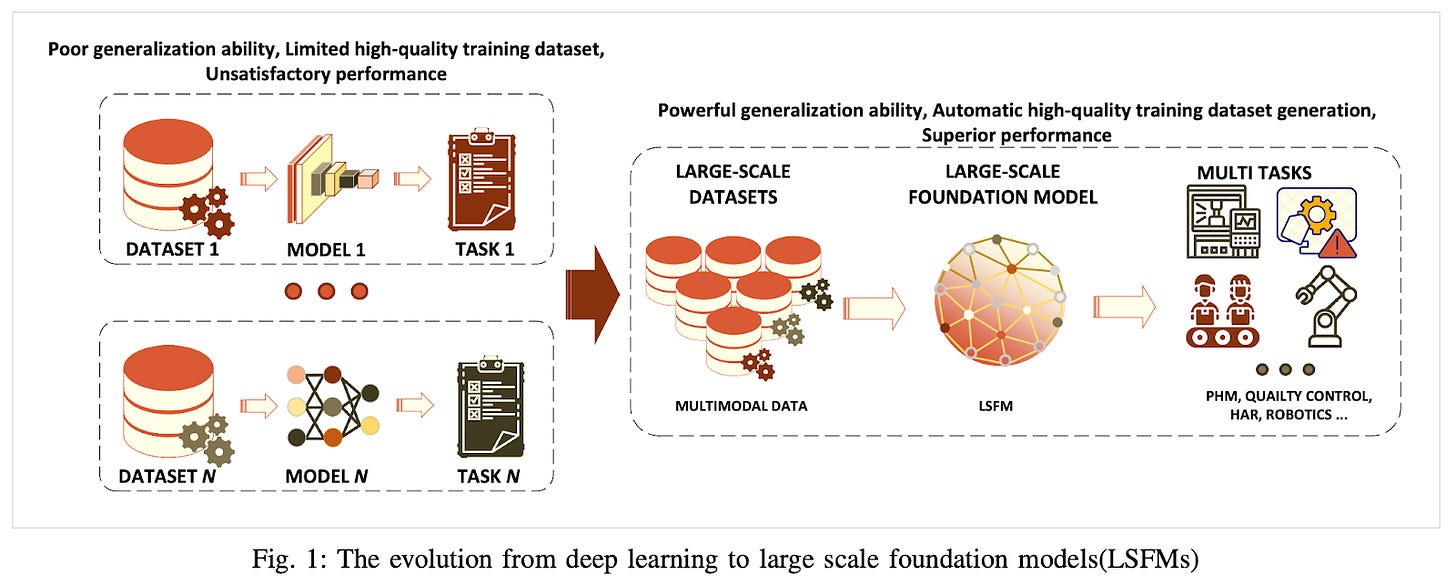

First off, what exactly does the term “foundation model” mean? And what does it mean for a foundation model to be specialized? Broadly speaking, FMs are deep learning models (usually transformer-based) that have been trained on large quantities of data and have large parameter counts (100 million or more parameters). FMs differ from other kinds of machine learning and deep learning models in their scale. That scale allows them to generalize, conduct multiple kinds of tasks, and perform well in novel scenarios not included in their training data, with zero-shot or few-shot learning. In contrast, traditional deep learning models like convolutional neural networks (CNNs) tend to be good at the specific tasks they learn from their training data, but are not good at generalizing beyond that data or conducting multiple tasks (ex: a CNN used for computer vision might be good at identifying a specific kind of Navy ship, like a frigate, in satellite imagery, but break down when trying to identify a different kind of ship, like a destroyer).

A general-purpose, text-based FM like GPT-4 is good at generating basic text and bantering back and forth with a human about everyday topics. However, GPT-4 is not designed to handle data types beyond text and images, and it struggles with expert topics and expert tasks. General-purpose FMs are trained on broad swaths of text and image data from the Internet and generate text and images. A specialized FM, by contrast, is trained on a specific kind of data relevant to specific tasks and can generate responses that require specialized expertise. Many FMs work by predicting the next token in a sequence. In the case of LLMs, those tokens represent text, but tokens can represent any kind of data, be it images, audio, LiDAR point clouds, robot actions, or otherwise.
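To make the idea of non-text tokens concrete, here is a minimal sketch of one way continuous robot actions can be turned into discrete tokens that a transformer can predict. It assumes simple uniform binning into 256 bins per action dimension, similar in spirit to the action discretization used by robotics models like RT-2. The function names and the [-1, 1] action range are hypothetical, and real systems differ in the details.

```python
from typing import List, Sequence

# Assumption: each continuous action dimension is split into 256 equal-width bins.
NUM_BINS = 256


def action_to_tokens(action: Sequence[float], low: float, high: float,
                     num_bins: int = NUM_BINS) -> List[int]:
    """Map each continuous action value to an integer token id in [0, num_bins - 1]."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)     # keep values inside the valid range
        scaled = (clipped - low) / (high - low)  # rescale to [0, 1]
        # The top edge (scaled == 1.0) is folded into the last bin.
        tokens.append(min(int(scaled * num_bins), num_bins - 1))
    return tokens


def tokens_to_action(tokens: Sequence[int], low: float, high: float,
                     num_bins: int = NUM_BINS) -> List[float]:
    """Map token ids back to continuous values at the center of each bin."""
    width = (high - low) / num_bins
    return [low + (t + 0.5) * width for t in tokens]


# Hypothetical 4-dimensional action (e.g., normalized joint velocities) in [-1, 1].
tokens = action_to_tokens([-1.0, 0.0, 0.25, 1.0], low=-1.0, high=1.0)
print(tokens)  # [0, 128, 160, 255]
print(tokens_to_action(tokens, low=-1.0, high=1.0))
```

Once actions are integers, a model can predict them the same way an LLM predicts the next word. The trade-off is quantization error: for in-range values, the decoded action is off by at most half a bin width (here 1/256).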

One of the most active areas of research (and startup funding!) is robotics foundation models.¹ Startups like Cobot,² Covariant, and Skild have all raised large funding rounds to build robots and robotics foundation models that promise to finally unlock general-purpose robotics. Today, most robots do not generalize well: they work only in specific environments, carrying out specific tasks (ex: a robot arm may be programmed to pick and place a specific kind of object on a specific assembly line). A robotics FM could enable a robot to carry out arbitrary tasks in arbitrary settings, even if those tasks were not present in its training data.

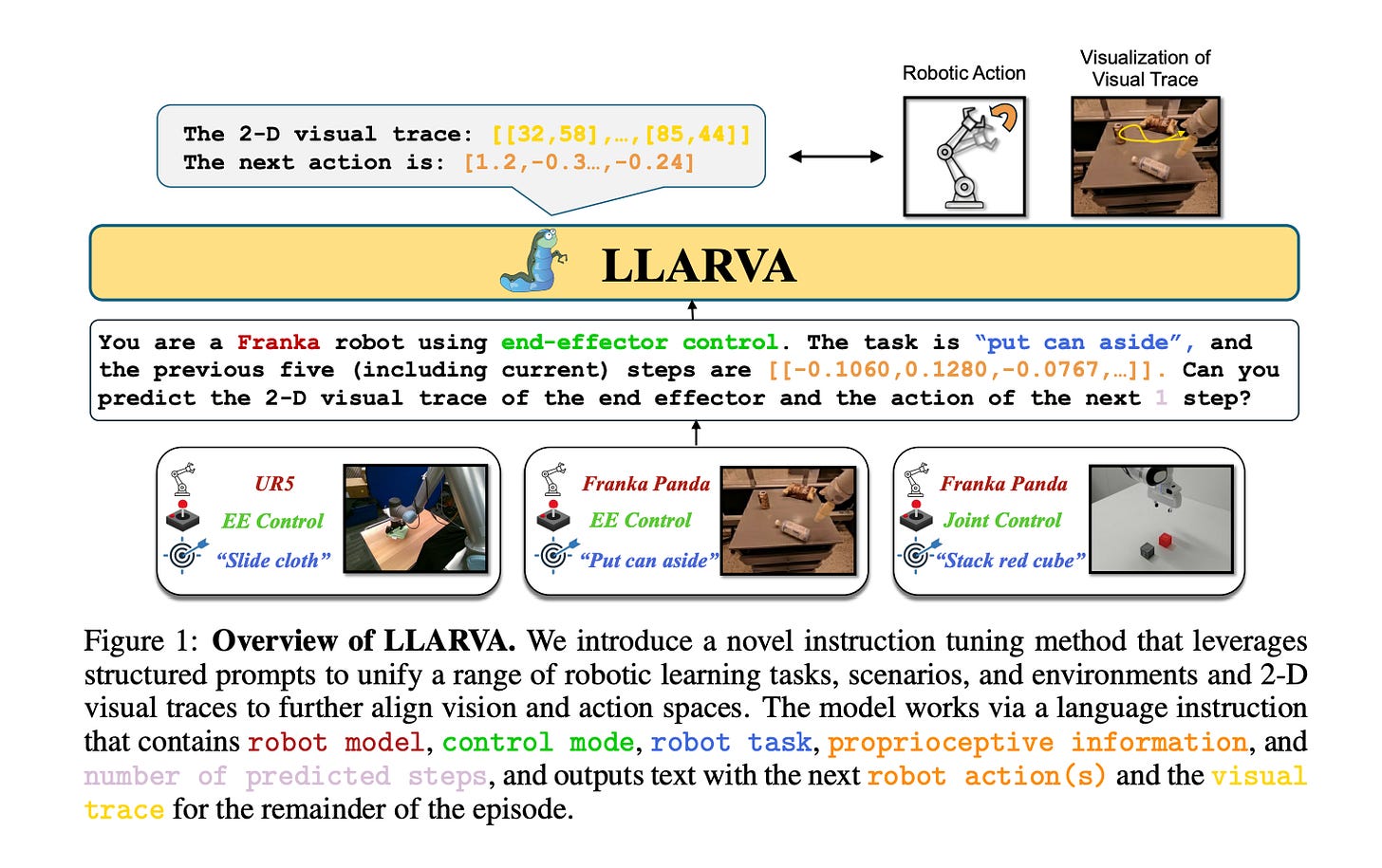

Most work on robotics foundation models focuses on “Vision Language Action” (VLA) models applied to robotics use cases (ex: see OpenVLA, Octo, RT-X, and LLARVA). VLA models take vision and language data as input and output robot actions that direct a robot to carry out a particular task. Researchers train robotics foundation models on large multimodal datasets that pair a natural language description of a task with a series of image frames of a robot carrying out that task (normally RGB, but sometimes with depth or LiDAR). These are combined with the current state of the robot’s joints, its sensor readings, and the actions it took at each step.
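The training data described above can be pictured as a simple record per timestep. Below is a minimal sketch of one possible schema, and of how a demonstration episode might be sliced into (observation, action) training examples. The class and field names, the tiny frame format, and the two-frame history window are all hypothetical illustrations, not the format actually used by OpenVLA, Octo, or RT-X.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Step:
    """One timestep of a teleoperated demonstration (hypothetical schema)."""
    rgb_frame: List[List[List[int]]]                 # H x W x 3 camera image
    joint_state: List[float]                         # current joint positions / sensor readings
    action: List[float]                              # command the operator issued at this step
    depth_frame: Optional[List[List[float]]] = None  # optional depth channel


@dataclass
class Episode:
    """A full demonstration paired with its natural-language task description."""
    instruction: str
    steps: List[Step] = field(default_factory=list)


def to_training_examples(episode: Episode, history: int = 2) -> List[Dict]:
    """Turn an episode into (observation -> action) pairs for imitation learning.

    Each example sees the instruction, up to `history` most recent frames,
    and the current joint state; the target is the action taken at that step.
    """
    examples = []
    for t, step in enumerate(episode.steps):
        start = max(0, t - history + 1)
        frames = [s.rgb_frame for s in episode.steps[start:t + 1]]
        examples.append({
            "instruction": episode.instruction,
            "frames": frames,
            "joint_state": step.joint_state,
            "target_action": step.action,
        })
    return examples


# Tiny usage example with 1x1 "images" and a 2-joint arm.
demo = Episode(
    instruction="pick up the red block",
    steps=[
        Step(rgb_frame=[[[255, 0, 0]]], joint_state=[0.0, 0.1], action=[0.05, 0.0]),
        Step(rgb_frame=[[[250, 5, 0]]], joint_state=[0.05, 0.1], action=[0.05, -0.02]),
        Step(rgb_frame=[[[240, 10, 0]]], joint_state=[0.1, 0.08], action=[0.0, 0.0]),
    ],
)
examples = to_training_examples(demo)
print(len(examples), [len(e["frames"]) for e in examples])  # 3 [1, 2, 2]
```

Each example pairs what the robot could observe with what the human operator did next. Recording those operator actions is what makes this data expensive to collect.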

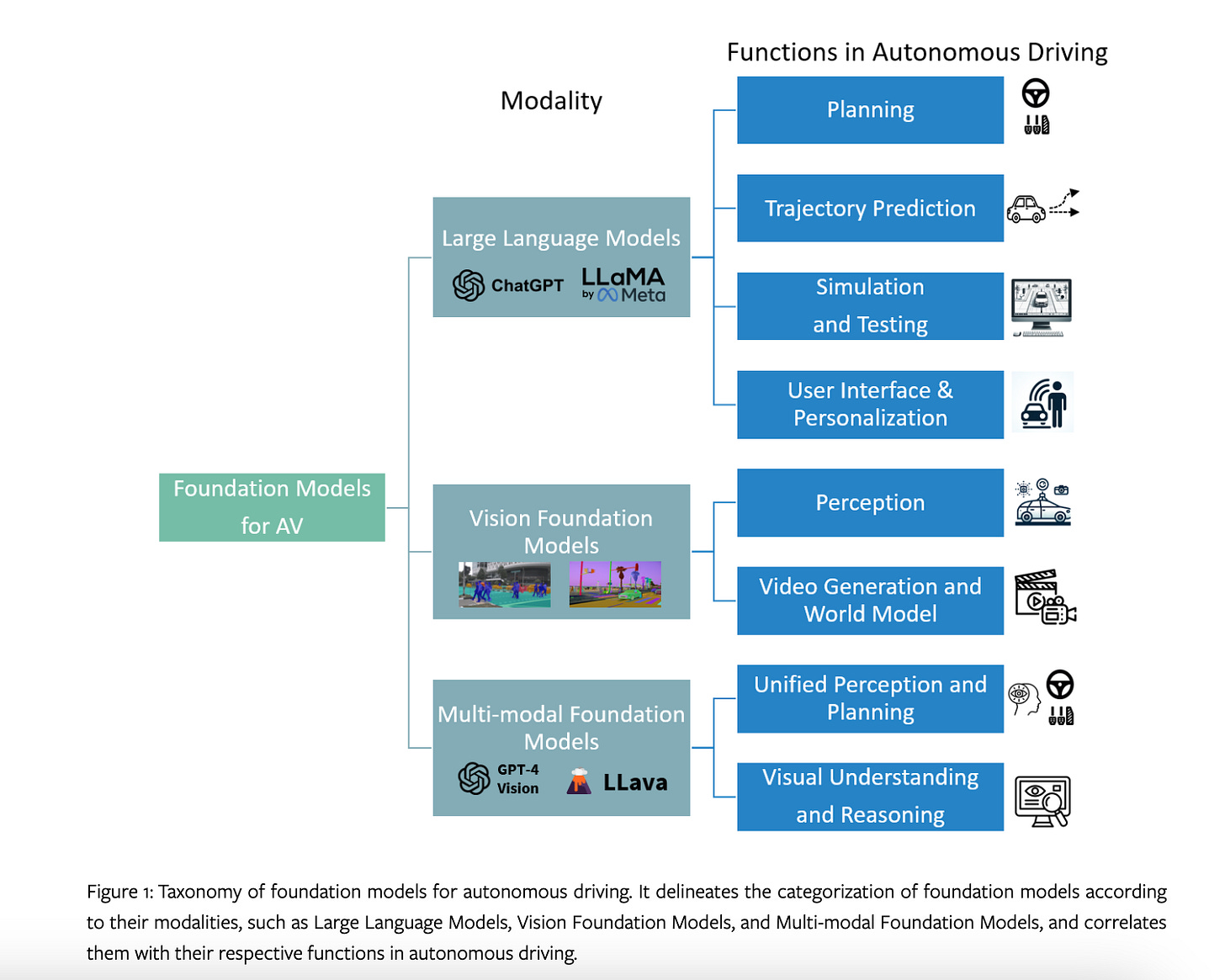

In a similar vein, researchers are also developing specialized foundation models for autonomous vehicles. These models are trained on several modalities, including text, audio, video, LiDAR point clouds, and vehicle control signals, in order to carry out autonomous driving tasks such as perception, visual understanding, and planning. For instance, autonomous vehicle startup Waabi recently released its first foundation model, Copilot4D, which focuses explicitly on simulating 3D space for autonomous vehicle navigation. Rather than traditional text data, Copilot4D is trained on LiDAR data, a kind of 3D sensor data commonly used on autonomous vehicles. It predicts the future state of 3D space by generating a future LiDAR point cloud. Similarly, autonomous driving startup Wayve has developed its own foundation model to generate realistic driving videos. Wayve’s world model uses camera images, text descriptions, and vehicle control signals as input tokens and predicts the next frame. Other research focuses on using LLMs fine-tuned on traffic data to inform autonomous driving decisions.
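World models like these treat several modalities as a single token stream and learn to predict what comes next. The sketch below is purely illustrative and is an assumption, not Wayve’s or Waabi’s actual design. It gives each modality its own hypothetical range of token ids, interleaves text, image, and control tokens timestep by timestep, and builds the shifted input/target pair used for next-token prediction. The vocabulary sizes are invented for the example. The image and action tokens are assumed to come from separate tokenizers that are not shown.

```python
from typing import Dict, List, Tuple

# Hypothetical vocabulary layout: each modality owns a contiguous range of ids.
VOCAB_SIZES = {"text": 50_000, "image": 10_000, "action": 256}
VOCAB_OFFSETS = {"text": 0, "image": 50_000, "action": 60_000}
MODALITY_ORDER = ("text", "image", "action")  # order within each timestep (an assumption)


def interleave(timesteps: List[Dict[str, List[int]]]) -> List[int]:
    """Flatten per-timestep, per-modality token lists into one shared-vocabulary sequence."""
    sequence = []
    for step in timesteps:
        for modality in MODALITY_ORDER:
            for token in step.get(modality, []):
                if not 0 <= token < VOCAB_SIZES[modality]:
                    raise ValueError(f"{modality} token {token} out of range")
                sequence.append(VOCAB_OFFSETS[modality] + token)
    return sequence


def next_token_pairs(sequence: List[int]) -> Tuple[List[int], List[int]]:
    """Inputs are every token but the last; targets are the same sequence shifted by one."""
    return sequence[:-1], sequence[1:]


def modality_of(token_id: int) -> str:
    """Recover which modality a shared-vocabulary id belongs to."""
    for name in MODALITY_ORDER:
        offset = VOCAB_OFFSETS[name]
        if offset <= token_id < offset + VOCAB_SIZES[name]:
            return name
    raise ValueError(f"unknown token id {token_id}")


steps = [
    {"text": [5, 9], "image": [1, 2], "action": [3]},  # caption + frame + control signal
    {"image": [4], "action": [0]},                      # next frame + control signal
]
seq = interleave(steps)
inputs, targets = next_token_pairs(seq)
print(seq)  # [5, 9, 50001, 50002, 60003, 50004, 60000]
```

In this framing, a model that predicts the image tokens of the next timestep is “predicting the next frame.” A shared vocabulary lets a single transformer attend across all of the modalities at once.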

Another form of specialized foundation model that will undoubtedly impact national security is the geospatial foundation model. Several groups (including NASA) are developing geospatial FMs that understand geospatial data such as satellite and aerial imagery. Geospatial FMs are trained to understand imagery captured by several kinds of sensors (including infrared, hyperspectral, electro-optical, and more) at different resolutions and noise levels. The DoD and intelligence community rely heavily on geospatial intelligence to understand our adversaries’ actions (in fact, an entire intelligence agency, the National Geospatial-Intelligence Agency, is dedicated to collecting and analyzing geospatial intelligence). Today, analysts spend significant time combing through geospatial data looking for relevant information. Even the DoD’s AI-enabled geospatial analysis systems, like those developed by Project Maven, still require significant manual effort to label geospatial data in order to train computer vision algorithms. In the future, geospatial FMs will largely eliminate the need to manually label and comb through geospatial data. The FMs themselves will understand the data and be able to answer analysts’ questions, identify relevant entities and anomalies, and more.

Not only will specialized FMs change the way the national security community analyzes intelligence, they will also strengthen the US and allied industrial base. Companies like Arena AI are developing manufacturing foundation models. These models are trained on manufacturing data (ex: time-series sensor data from manufacturing equipment, visual data of hardware being manufactured, etc.) and can assist manufacturers with tasks like predictive maintenance, identifying manufacturing anomalies and hardware defects, optimizing equipment settings, and improving yields.

Similarly, Arena AI and other startups are developing FMs for designing and testing electronics such as semiconductors and circuit boards (ex: one research paper outlines its approach to “large circuit models”). These specialized foundation models are trained on semiconductor and circuit design documents and specifications. They can produce net-new electronics designs, as well as test human-created designs and detect errors in them. Further, researchers and startups like Hanomi are also exploring 3D hardware design foundation models trained on natural language text and CAD file data. Such models could automatically generate 3D CAD design files from a natural language description of a hardware system.

Some researchers are developing FMs that understand the world at a deep scientific level. For instance, researchers and startups are developing large physics models, biology FMs, mathematical FMs, astronomy FMs, and materials science FMs. General-purpose LLMs like GPT-4 famously struggle to reason about mathematics, science, and the physical world. Large physics and mathematics models, for example, could be used for a wide range of tasks relevant to national security, including hardware engineering design, software engineering, simulation, and prediction.

One startup I met (still in stealth) is training a foundation model on decades of weather data in order to learn the physics behind weather and better forecast future weather patterns (their ultimate goal is to train a general-purpose large physics model that understands the physical world more broadly). Hyper-accurate weather forecasts are vital for national security. National security organizations need to maximize operational readiness, protect infrastructure, facilities, equipment, and personnel against high-impact weather threats, and make better operational decisions.

Similarly, Vlad Tenev (co-founder and CEO of Robinhood) recently co-founded a new startup, Harmonic, which is developing foundation models for mathematical superintelligence. As Harmonic’s website outlines, specialized mathematical FMs will revolutionize engineering, science, finance, and more. They will lead to scientific and mathematical breakthroughs, improved aerospace and chip design engineering, better understanding of financial markets, improved software engineering, and much more. Who knows what kind of scientific breakthroughs these specialized scientific models will produce? New scientific breakthroughs may completely upend the national security paradigm. These models could help produce quantum computers, the next generation of synthetic biology, new approaches to energy, new materials that benefit warfighters, and more.

Specialized FM development is still in its early days, and researchers face a number of challenges when developing these models. First and foremost, many specialized FM developers lack enough specialized data to train large, high-quality, generalizable FMs. The benefit for general-purpose text and vision FMs like GPT-4 and LLaMA is that the Internet contains zettabytes of general-purpose text and visual data. However, the same cannot be said of many of the data types and task-specific data needed for specialized FMs. In robotics, for example, the data needed to train robotics FMs often has to be created manually by recording humans teleoperating physical robots. This kind of data is much more expensive to create than general text data. To get around this data problem, some researchers are experimenting with training specialized FMs on synthetic data (fake data created in simulation or generated by a larger general-purpose foundation model). However, some experts worry that overreliance on synthetic data can lead to poor model performance.

Undoubtedly, incumbents with access to proprietary specialized data (especially data that requires specific hardware to produce like robots, autonomous vehicles, or satellites) will have an edge in specialized FM development. Those seeking to develop specialized FMs will need to find partners with the kind of data they need to train their FMs. Already, I’ve met several specialized FM startups who are working to establish creative partnerships with existing data providers (particularly in the robotics space).

Training a new foundation model can also be expensive, which means

As I’ve previously written about, the national security community is sitting on troves of specialized data that does not exist elsewhere. The promise of specialized FMs emphasizes the importance of the DoD’s data extraction and data quality initiatives like those spearheaded by the Chief Digital and AI Office (CDAO). It is essential for the DoD and intelligence community to extract and organize their data in a way that allows for the creation of specialized FMs for national security use cases. Specialized FMs will usher in a new era of robotics, scientific discovery, engineering, manufacturing, intelligence analysis, and more. As these models are perfected, the US national security community must be proactive in adopting this technology to improve our ability to deter our adversaries and protect democracy around the world.

As always, please reach out if you or anyone you know is building AI-enabled products at the intersection of national security and commercial technologies! And please let me know your thoughts – I know this is a quickly changing technology space and welcome any and all feedback. Where else are there opportunities for advancements in specialized foundation models to revolutionize national security? How else can the US DoD and intelligence community best advance the adoption of AI?

Note: The opinions and views expressed in this article are solely my own and do not reflect the views, policies, or position of my employer or any other organization or individual with which I am affiliated.

For more on how robotics and autonomous vehicles will impact national security, I recommend reading Army of None: Autonomous Weapons and the Future of War by Paul Scharre and The Kill Chain by Christian Brose.

For more on robotics FMs, I HIGHLY recommend reading this blog post from Cobot about robotics FMs and other ML approaches to improving robotics: https://medium.com/@mjvogelsong/unlocking-the-future-of-robotic-intelligence-991e151bffe9.