Silicon Valley’s current favorite buzzwords are “AI agents.” Venture capitalists and founders alike have published numerous op-eds[1] painting an optimistic picture of a future in which “agentic” artificial intelligence (AI) models are able to do everything from scheduling meetings to purchasing airline tickets to tasking autonomous drones to planning and ordering a grocery list. But what exactly is an AI agent? And how will AI agents affect national security?

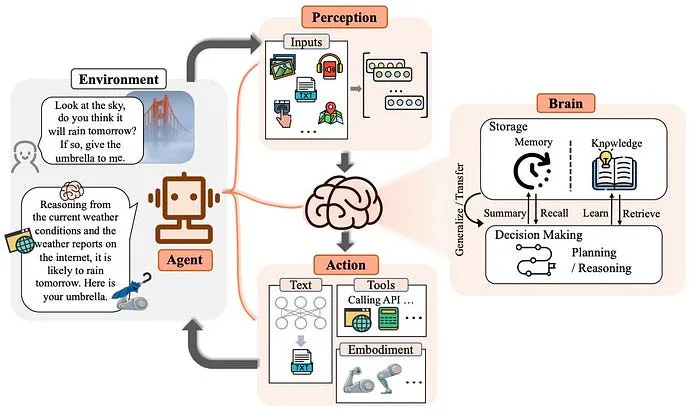

The term “AI agent” is not particularly well defined. Broadly speaking, an AI agent is a generative AI model (like a large language model, or LLM) that is able to use “tools” (ex: Google search, a calculator, an API call, etc.) to autonomously perform tasks. AI agent technology moves beyond the simple Q&A “chatbot” experience, familiar to many from ChatGPT. The actions an AI agent takes can be much more complex than simply generating text or an image – agents can call APIs, interact with third-party applications, fill out forms, respond to emails, and more. Often, agents do not need to be prompted by an end user – they may be triggered autonomously, and many end users may not know an AI agent is acting at all.
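To make the “model plus tools” idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the tool names, the `stub_model` function (a crude keyword rule standing in for the LLM's decision), and the canned search result. A real agent would ask a model which tool to call and would usually loop over several steps.

```python
import operator
from typing import Callable, Dict, Tuple

# Hypothetical tools: each is just a Python function the agent may call.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def calculator(expression: str) -> str:
    """Evaluate a simple 'a op b' expression such as '2 + 3'."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))

def search(query: str) -> str:
    """Stand-in for a web search tool; returns canned text."""
    return f"(stub) top result for '{query}'"

TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "search": search,
}

def stub_model(task: str) -> Tuple[str, str]:
    """Placeholder for the LLM: choose a tool and its argument.

    This keyword rule is an assumption made for the sketch; a real
    agent would delegate the choice to a generative model.
    """
    if any(symbol in task for symbol in "+-*"):
        return "calculator", task
    return "search", task

def run_agent(task: str) -> str:
    """One agent step: pick a tool, call it, report the observation."""
    tool_name, argument = stub_model(task)
    observation = TOOLS[tool_name](argument)
    return f"{tool_name} -> {observation}"
```

Calling `run_agent("2 + 3")` routes the task to the calculator, while a plain-language question falls through to the search stub.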

One commonly used example of an AI agent is an LLM that can plan a trip on behalf of a user. The user describes the trip they wish to take (ex: “Plan a trip to Croatia between July 15 and July 25”), and an Internet-connected LLM uses tools to book appropriate flights, a rental car, and a hotel room for the user and to generate a day-by-day itinerary for the trip. A more complex agentic system may make use of several specialized agentic models that each have access to different tools (ex: there may be one agentic model that is good at writing and executing database queries, one that can write and execute Python code, another that is good at parsing complicated text, and yet another that can interpret images).

To interact with a third-party system, an AI agent may have access to a set of APIs it can call (ex: in the trip planning example, the AI agent can call United’s API to book a flight, Hertz’s API to book a rental car, and Marriott’s API to book a hotel room). Alternatively, an AI agent may use “robotic process automation” (RPA) technology to interact with a third-party application with limited API access. RPA uses “software robots” to automate repetitive, rules-based digital workflows. RPA enables users to hard code specific workflows in which the software “robot” clicks, types, and interacts with an application the same way a human does. It is particularly powerful for organizations (like the DoD) that rely on legacy software systems that lack robust APIs[2] and other modern integration tooling. Today, humans need to hard code RPA workflows. In the future, however, AI agents will be able to learn common workflows from human users and automatically replicate those workflows, without the need for hard coded steps. In 2021, the DoD created an RPA working group, and it is actively exploring and deploying RPA use cases in its legacy tech stack. Currently, the DoD does not use any AI in its RPA workflows, but it hopes to in the future.
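A hard coded RPA workflow can be pictured as a fixed script of UI steps replayed against an application. The sketch below is illustrative only: `FakeLegacyApp`, its `type_into` and `click` methods, and the field names are all hypothetical stand-ins for a legacy GUI and an RPA tool's actions, not any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class FakeLegacyApp:
    """Hypothetical stand-in for a legacy GUI application with no API."""
    fields: Dict[str, str] = field(default_factory=dict)
    submitted: List[Dict[str, str]] = field(default_factory=list)

    def type_into(self, field_name: str, text: str) -> None:
        """Simulate typing text into a named form field."""
        self.fields[field_name] = text

    def click(self, button: str) -> None:
        """Simulate a button click; 'Submit' saves and clears the form."""
        if button == "Submit":
            self.submitted.append(dict(self.fields))
            self.fields.clear()

# The workflow is a fixed list of (action, target, value template) steps
# that a human wrote in advance. Nothing here is learned.
WORKFLOW: List[Tuple[str, str, str]] = [
    ("type", "part_number", "{part_number}"),
    ("type", "quantity", "{quantity}"),
    ("click", "Submit", ""),
]

def run_workflow(app: FakeLegacyApp, record: Dict[str, str]) -> None:
    """Replay the scripted steps for one record."""
    for action, target, template in WORKFLOW:
        if action == "type":
            app.type_into(target, template.format(**record))
        elif action == "click":
            app.click(target)
```

The brittleness is visible in the sketch: if the form gains a field or a button is renamed, a human has to edit `WORKFLOW`. That is the gap an agent that learns workflows from users would aim to close.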

Agentic technology is still in its early days and remains imperfect. However, already there are some powerful ways it can be used to bolster national security. In this post, I’ll cover five initial segments in which AI agents can have a large impact on national security: 1. cybersecurity, 2. logistics & supply chain management, 3. intelligence analysis, 4. AI-enabled operating systems, and 5. autonomous vehicles & robotics.

1. Cybersecurity

One of the most powerful use cases for AI agents in the short term is cybersecurity. AI agents will help write secure code, patch vulnerable code, conduct penetration testing and red teaming, assist incident response and SOC analysts, and improve cyber threat intelligence.

First, as I wrote about in a previous post, AI agents are already very good at generating code. Over the past year, a number of companies (including Semgrep and GitHub’s CodeQL) have released products powered by AI agents that use traditional vulnerability detection tools to surface vulnerabilities and then employ code generation models to generate secure code that patches those vulnerabilities.

Second, several startups, including Staris AI and RunSybil, are using agentic systems to conduct penetration testing and red teaming. Agentic penetration testing systems use a number of tools (ex: attack surface management, API scanning tools, etc.) to find and remediate vulnerabilities in software systems. The LLM may then try to execute an attack based on a vulnerability it has found. For example, one startup I spoke with had an agent that was able to identify, write, and execute a SQL injection in a customer’s application. Another developed an agent that could autonomously and intelligently interact with applications to probe for vulnerabilities – for instance, rather than brute-forcing a login system with a list of common usernames and passwords, the agent may search the Internet for the default username and password of that particular kind of application. Generative AI can also help generate penetration test reports, saving penetration testers hours of tedious, manual work.

Third, security operations center (SOC) and incident response (IR) analysts are infamously overworked and overwhelmed by security alerts (many of which are false positives) generated by their plethora of cybersecurity tools. In the past, security leaders have tried to improve the efficiency of SOC and IR analysts through security orchestration, automation, and response (SOAR) tools. However, many of these automation initiatives have not lived up to expectations.

A number of startups have emerged that leverage AI agents to support SOC and IR analysts, including Dropzone AI, Alaris Security, Radiant Security, Culminate Security, Arcanna AI, and Wraithwatch. Unlike older automation solutions like SOARs, which require analysts to hard code workflows, agents are able to actively learn and generalize common workflows from human analysts. SOC analysts can use AI agents to collect relevant contextual data (ex: log data, employee emails, NDR / EDR / XDR data, etc.) relating to an incident. AI agents can then analyze this data to try to identify the root causes of the incident and generate an incident report with all of this information. Notably, the DoD (specifically the DoD’s Chief Digital and AI Office – CDAO) is already experimenting with the use of LLMs to assist SOC analyst report generation.

Further, an AI agent can run tests to determine the nature and severity of a SOC alert. If an alert is confirmed to be malicious, an agent could even take actions within other applications to mitigate the scope of the alert (ex: restrict user permissions for a compromised account). Additionally, AI agents can dynamically generate incident response playbooks for analysts to follow based on the specifics of a particular incident.
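The triage-then-mitigate flow described above might look like the following sketch. The `Alert` fields, the scoring thresholds, and the `permissions` dictionary (standing in for an identity system the agent would reach through an API) are all assumptions chosen for illustration, not a description of any vendor's product.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Alert:
    account: str
    failed_logins: int
    new_country: bool  # login came from a country not seen before

def score_alert(alert: Alert) -> int:
    """Toy severity score; the thresholds are illustrative assumptions."""
    score = 0
    if alert.failed_logins >= 10:
        score += 2
    if alert.new_country:
        score += 1
    return score

def triage(alert: Alert, permissions: Dict[str, str]) -> List[str]:
    """Return the playbook steps taken, restricting the account if warranted.

    `permissions` maps account -> role and is mutated in place when the
    alert is judged malicious.
    """
    steps = [f"collect logs for {alert.account}"]
    if score_alert(alert) >= 3:
        permissions[alert.account] = "restricted"
        steps.append(f"restrict permissions for {alert.account}")
        steps.append("notify on-call analyst")
    else:
        steps.append("close as likely false positive")
    return steps
```

The returned list doubles as a simple, incident-specific playbook that an analyst can review after the fact.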

Finally, there is a tremendous opportunity for AI agents to disrupt the cyber threat intelligence (CTI) market. Today, CTI incumbents like Recorded Future are excellent at collecting relevant information on emerging cyber and fraud threats from the dark web, deep web, and other sources. However, analysts still need to wade through troves of data to extract meaningful insights. Startups like Overwatch Data have emerged to build AI-native CTI tools that leverage advances in AI agent technology. AI agents can improve both the collection and analysis of CTI. For example, AI agents could be used to automate the operation of “sockpuppet” social media accounts, ingesting a site’s context, learning from other users’ behavior, and interacting with the platform the way a real human user would.[4]

Once data is collected, AI agents can help extract and summarize insights by querying multiple data streams to enrich information and present analysts with a more complete picture of threat actors. AI agents can automatically generate CTI reports and can even take proactive measures to prevent an attack from happening – for example, if an agent detects a trove of breached login information, it could automatically disable or reduce the privileges of each leaked account to prevent a major incident. Similarly, AI agents can ingest cyber threat intelligence reports and automatically generate and deploy threat detection rules to detect new vulnerabilities.
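The breached-credential response can be sketched as a simple match between a leak and an account directory. The `directory` dictionary below is a hypothetical stand-in for an identity provider, and the email addresses are made up; a real agent would act through that provider's own API and would likely require human approval.

```python
from typing import Dict, Iterable, List

def disable_leaked_accounts(
    leaked_emails: Iterable[str], directory: Dict[str, bool]
) -> List[str]:
    """Disable every active account whose email appears in a leak.

    `directory` maps email -> is_active and is mutated in place.
    Returns the emails that were disabled, in sorted order.
    """
    # Normalize the leak so casing and stray whitespace do not hide matches.
    leaked = {email.strip().lower() for email in leaked_emails}
    disabled = []
    for email in sorted(directory):
        if email.lower() in leaked and directory[email]:
            directory[email] = False
            disabled.append(email)
    return disabled
```

Accounts that are already inactive, or that appear in the leak but not in the directory, are left alone, which keeps the action narrowly scoped.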

2. Logistics and Supply Chain

As WWI US Army General John Pershing once said, “Infantry wins battles, logistics wins wars.” The DoD runs one of the most complicated logistics and supply chain operations in the world – coordinating equipment (including some of the most dangerous and expensive equipment) and resources for millions of people across thousands of global bases. Today, most logistics and supply chain management workflows (in both the commercial and government spaces) are remarkably manual. Humans manually track assets, place orders via phone, email, and WhatsApp, forecast demand, and draft supply plans. Among the primary tools logistics and supply chain managers rely on are Enterprise Resource Planning (ERP) tools, which tend to be expensive, clunky, legacy systems.

The DoD has six major ERP systems – one for each service (Coast Guard, Army, Navy, Air Force, and Marines) and one managed by the Defense Logistics Agency (DLA) – and more than 1,000 other tools it uses for logistics-related tasks like demand forecasting. However, most of these tools are not interoperable with one another and lack data analytics functionality, which wastes many hours and limits their usefulness.

In the short term, AI agents will make existing logistics tools more interoperable and easier to use. A basic use case is to have agents copy data from one ERP system to another. There are reports of logisticians spending hours manually copying information from one system to another because the systems do not have integrations – an AI agent could do this work instead of a human, using AI-enabled RPA to interact with systems that lack APIs. DLA and the DoD as a whole are actively exploring ways traditional RPA can use existing manual interfaces to bridge legacy ERP systems. In the future, these traditional RPA workflows can be expanded and improved using AI.

An AI agent could also automate supply chain and logistics data entry based on natural language communications. Often, orders are placed and logistics are coordinated via email or phone call. An AI agent could automatically populate a supply chain management tool with information from an email or other form of communication, saving time and ensuring that all relevant information is documented in a standard form. In the future, an AI agent could also automatically dispatch orders, optimizing supply mechanisms to ensure resources get to end users as quickly as possible. For instance, one startup, Didero AI, uses AI agents to help organizations manage their supply chains by identifying new and alternative vendors, coordinating communications with vendors, and monitoring supply chain disruptions.
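As a rough sketch of turning a natural language order into a standard record, the example below uses a regular expression as a stand-in for the language model. The pattern, the field names, and the sample email are all assumptions for illustration; a real agent would rely on a model to handle the variety of phrasing that a regex cannot.

```python
import re
from typing import Dict, Optional

# Matches phrasing like "40 units of hydraulic filters to Depot Alpha by 2025-03-01".
ORDER_PATTERN = re.compile(
    r"(?P<quantity>\d+)\s+(?:units?\s+of\s+)?(?P<item>[A-Za-z][\w\- ]*?)\s+"
    r"(?:to|for)\s+(?P<destination>[A-Z][\w ]*?)\s+by\s+(?P<due>\d{4}-\d{2}-\d{2})"
)

def extract_order(email_body: str) -> Optional[Dict[str, object]]:
    """Pull item, quantity, destination, and due date out of an email.

    Returns None when nothing matches, so the message can be left
    for a human to review instead of being guessed at.
    """
    match = ORDER_PATTERN.search(email_body)
    if match is None:
        return None
    return {
        "item": match["item"].strip(),
        "quantity": int(match["quantity"]),
        "destination": match["destination"].strip(),
        "due": match["due"],
    }
```

The resulting dictionary is the kind of standard form that could then be written into a supply chain management tool.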

It is highly likely that future conflicts in regions like the Indo-Pacific will lead to tremendous supply chain disruptions. AI agents can help logisticians manage their supply chains even in a contested logistics environment. An AI agent can quickly identify potential supply chain disruptions by ingesting natural language news sources, third-party data sources, and reports; highlight the parts of a specific user’s supply chain that will be affected by the disruptions; and potentially even suggest ways to get around the disruptions (ex: alternative vendors for disrupted parts, alternative supply routes, etc.).

Already, the DoD has recognized the potential for generative AI to improve logistics. CDAO is actively experimenting with using generative AI for logistics. In March 2024, CDAO’s 9th GIDE exercise experimented with using GenAI to draft supply plans.

Finally, AI agents will help improve national security logistics and supply chains by improving the US and allied manufacturing base itself. AI agents can be used for manufacturing optimization and predictive maintenance, ingesting information from sensors on the manufacturing line and automatically re-configuring manufacturing equipment to improve processes. Agents can also be used to improve designs of critical components like semiconductors. For example, Hanomi AI uses AI agents to improve CAD design of hardware systems. Arena AI uses transformer models to improve manufacturing processes. Several startups (most still in stealth) are training AI agents to test, develop, and optimize semiconductor designs.

3. Data Analytics / Intel Analysis

Another clear use case for AI agents is in assisting intelligence analysts across the DoD and intelligence community (IC). Intelligence analysts spend most of their time ingesting natural language and structured information and producing natural language intelligence reports based on that information. Companies like Vannevar Labs, Primer AI, and Palantir represent the first generation of AI-enabled intelligence analysis platforms. However, AI agents represent a new generation of technology that will improve the efficiency and effectiveness of intelligence analysts.

At the most basic level, intelligence analysts can use generative AI agents to ask natural language questions about data sources. For example, Danti AI lets intelligence analysts ask natural language questions about geospatial and time-stamped data. Similarly, both Palantir and Scale AI have built AI-enabled knowledge management systems (Palantir AIP and Scale Donovan, respectively) that allow national security analysts to query large data stores.

AI coding agents will also help intelligence analysts more quickly analyze structured data – agents can generate complex search queries and data analytics scripts in languages like Python or R based on natural language prompts.

Finally, AI agents can be used to write full intelligence reports based on relevant data and notes provided by intelligence analysts. The US national security community is still early in experimenting with these capabilities, but already I’ve met folks working on AI agent-enabled platforms that tackle intelligence report generation tasks, such as drafting the President’s Daily Brief.

4. AI Agent OS

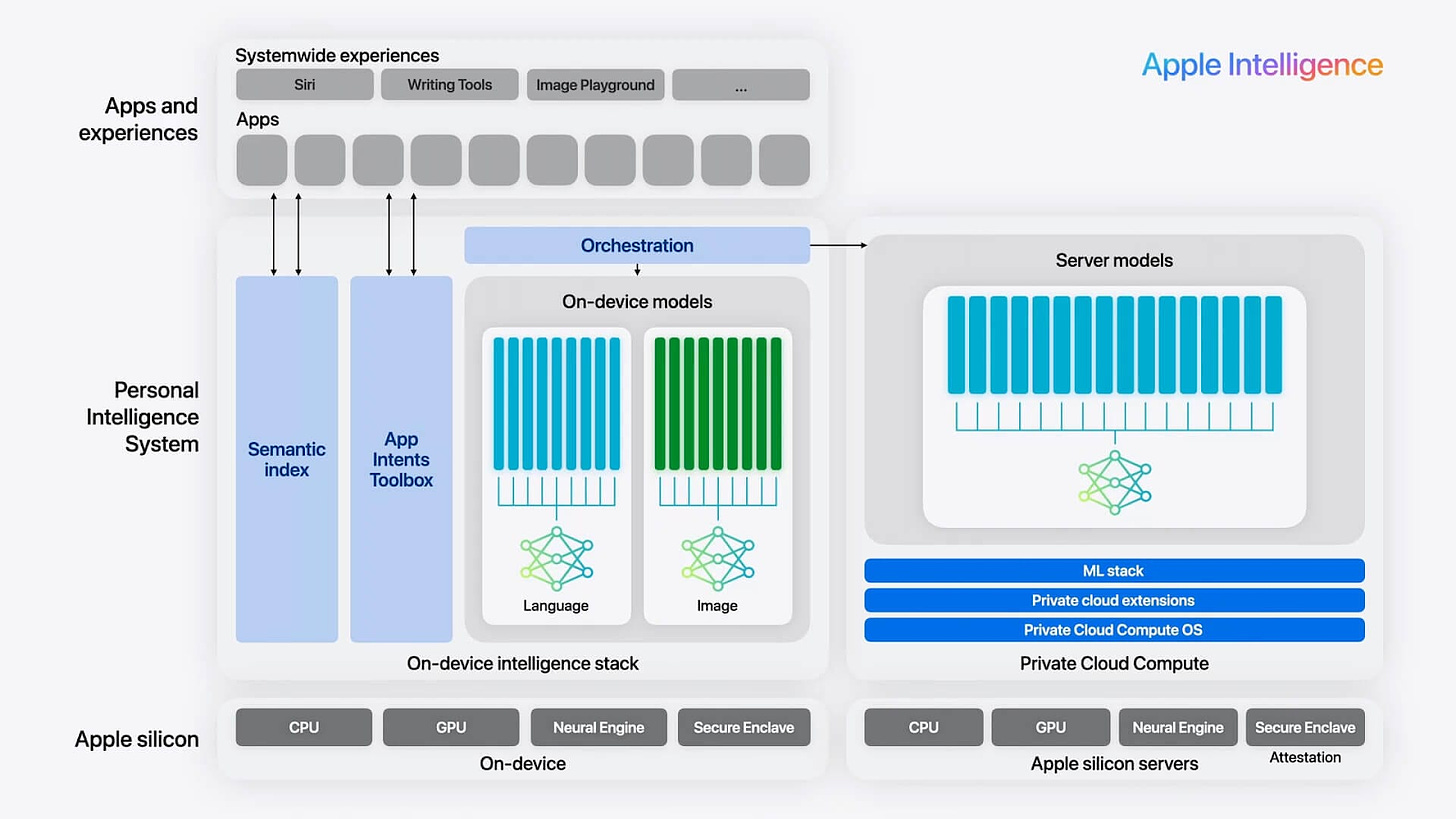

Not only will AI agents help with specific tasks like logistics management and cybersecurity; AI agents built directly into devices’ operating systems (OSes) will also be able to orchestrate tasks across multiple applications on a device. Implementing AI agents at the OS layer allows agents to build context across many app interactions. Most third-party apps are “sandboxed” from each other, so they cannot learn from the data and user actions that occur across multiple applications. However, an OS-layer AI agent can gather context from all user interactions, apps, and data stores, allowing it to make highly informed decisions and take highly informed actions.

OS-layer AI agents also have a seemingly “infinite action space.” In the future, an OS-layer agent would be able to do anything on a device that a human user can do, as it is able to interact with all applications available to a user. Agents will be able to use all the interfaces available to humans – virtual keyboards, touchscreens, mouse movements, volume buttons, power buttons, etc. Continuing advances in small generative models will enable widespread and private adoption of OS-layer agents, as small models can run on device, never sending sensitive user information back to a third party cloud service.

Both Microsoft and Apple have announced early features that use generative AI to operate their devices. At its most recent developer conference, Apple announced Apple Intelligence – small generative models deployed directly on Apple devices with the ability to assist users in a variety of tasks across a device (writing texts, generating images, etc.). Similarly, Microsoft recently announced Copilot+ PCs, which are PCs with small generative models deployed on device, and Microsoft Recall, a new tool that keeps track of everything users do on Windows machines and allows users to search their usage history. While Recall itself cannot control Windows machines, it shows that Microsoft has developed the ability to understand what is happening on a Windows machine at the graphical subsystem level. Microsoft therefore has the means to develop models that know how to use computers via their graphical interfaces.

Startups are also working on building AI agent-enabled operating systems, as they are able to build on top of open source operating systems like Android and Linux. For example, Wafer Systems is building an AI agent enabled version of Android that can autonomously operate an Android phone. In one demo, Wafer demonstrates a toy example: say a user receives a text from a friend about going surfing – Wafer will automatically look up the surf report in a surfing app and draft a response to the text.

Now, this example may seem trivial. However, consider if a system like Wafer’s were built into DoD systems equipped with apps like ATAK – an Android app widely used by US warfighters. An AI agent-enabled ATAK device could quickly ingest information being fed to a soldier’s ATAK and take quick action in the midst of a time-sensitive conflict, much faster than a human user would be able to. Warfighters use ATAK to analyze geospatial information, communicate, and even direct physical assets. Imagine an AI agent-enabled ATAK automatically analyzing fresh satellite imagery, identifying a key target, ingesting the location of a teammate, dispatching resources to that teammate, generating a report, and sending it to all relevant users.

5. Autonomous Vehicles and Robotics

One of the more ambitious use cases of AI agents will be to actually control physical autonomous vehicles and robotics. In this case, the “tools” an AI agent uses may actually be physical robotics systems and autonomous vehicles rather than software APIs. Already companies like Primordial Labs and Breaker Industries are experimenting with limited agentic use cases for autonomous systems – both companies allow drone operators to direct their systems using natural language speech. Users can simply speak a command (ex: “Take off and fly to 75 feet”) and an AI agent translates that command into drone actions.
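The translation from a spoken-style command to drone actions can be sketched as follows. Keyword and regex matching stands in for the language model, and the action names (`takeoff`, `set_altitude_m`, `land`) are hypothetical; they do not correspond to any particular company's drone interface.

```python
import re
from typing import List, Tuple

FEET_TO_METERS = 0.3048

def parse_command(command: str) -> List[Tuple[str, float]]:
    """Translate a natural language command into (action, value) pairs.

    Altitudes are normalized to meters so downstream code deals with
    a single unit regardless of how the operator phrased the command.
    """
    text = command.lower()
    actions: List[Tuple[str, float]] = []
    if "take off" in text or "takeoff" in text:
        actions.append(("takeoff", 0.0))
    altitude = re.search(
        r"(?:fly|climb|descend) to (\d+(?:\.\d+)?) (feet|meters)", text
    )
    if altitude:
        value = float(altitude.group(1))
        if altitude.group(2) == "feet":
            value *= FEET_TO_METERS
        actions.append(("set_altitude_m", round(value, 2)))
    if re.search(r"\bland\b", text):
        actions.append(("land", 0.0))
    return actions
```

Commands the parser does not recognize yield an empty list, which a real system would treat as a cue to ask the operator for clarification.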

In the shorter term, AI agents can be built into automotive infotainment systems like Apple CarPlay and Android Auto to help drivers navigate and control other car functions like air conditioning and music. These agentic systems could monitor a vehicle’s power level to ensure it has enough power to reach its destination and send progress reports back to human overseers. While this use case may seem trivial, imagine the impact this kind of autonomy could have in a high-stress combat environment, where an AI agent built into a navigation system could quickly ingest data from a myriad of sensors (and potentially even integrate data from teammates’ systems) and generate navigation routes to help warfighters in dangerous, time-sensitive situations. The agent could actively plan its route to avoid enemies and obstacles detected by onboard and third-party sensors.
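Route planning around detected obstacles can be illustrated with a breadth-first search over a grid. This is a deliberately simple sketch: the grid world, the `blocked` set (standing in for cells flagged by sensors), and the 4-connected movement model are assumptions, and real navigation systems use richer maps and cost functions.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]

def plan_route(
    width: int, height: int, start: Cell, goal: Cell, blocked: Set[Cell]
) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal that avoids blocked cells.

    Returns the path as a list of cells including both endpoints,
    or None if no route exists.
    """
    if start in blocked or goal in blocked:
        return None
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path: List[Cell] = []
            node: Optional[Cell] = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        x, y = current
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            in_bounds = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if in_bounds and nxt not in blocked and nxt not in came_from:
                came_from[nxt] = current
                queue.append(nxt)
    return None
```

When sensors report a new obstacle, the agent can add the cell to `blocked` and call `plan_route` again from the vehicle's current position.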

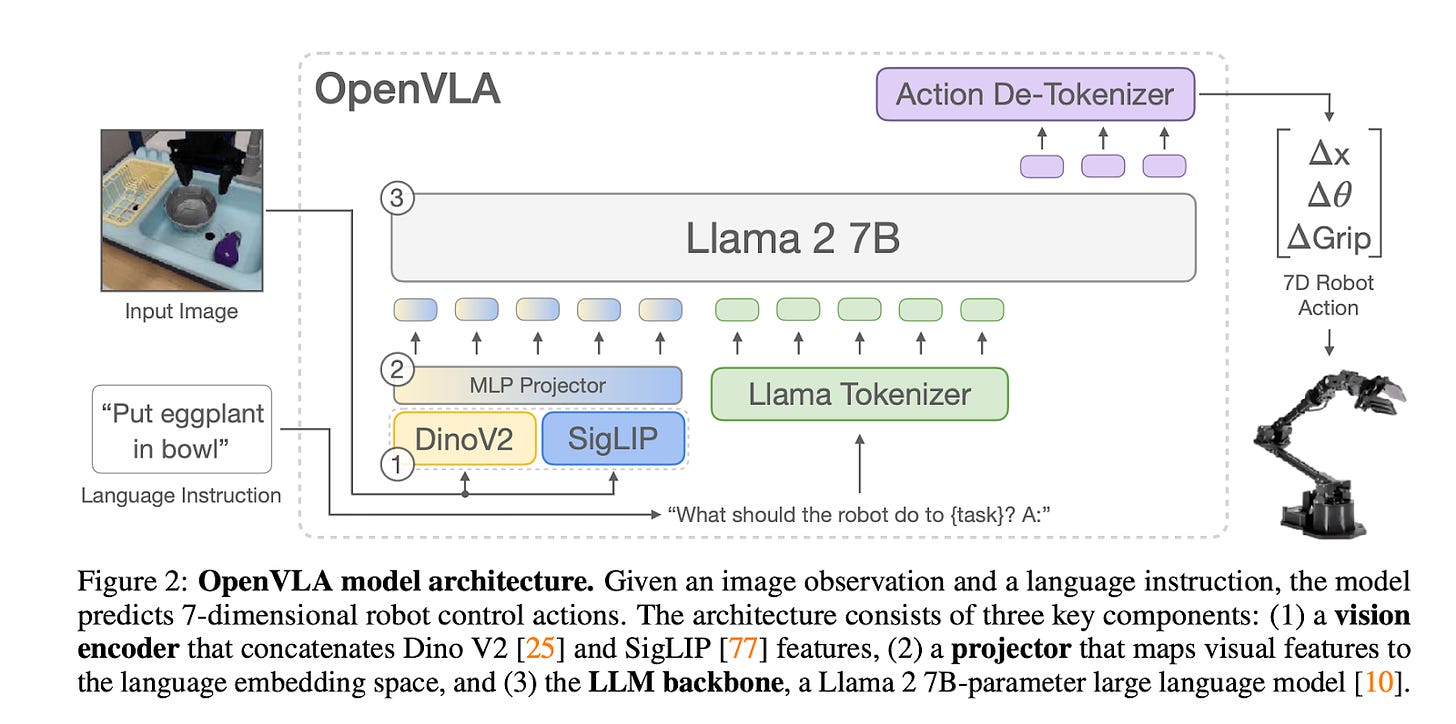

In the longer term, AI agents will gain direct control over autonomous vehicles. Rather than merely operate a navigation, communication, or infotainment system, AI agents will be able to actively control vehicles. Many autonomous vehicle researchers are actively experimenting with “Vision Language Models” (VLMs) and “Vision Language Action Models” (VLAs), language models that can interface with both images and text in order to tackle tasks from visual question answering to image captioning. VLAs can be used to “generate” and execute robotic control actions based on images and text tasks and can quickly learn from human “demonstrations” of tasks. For example, as exemplified in the diagram below, given an image of several objects (in this case, a bowl and an eggplant) and a natural language task (ex: “put the object in the bowl”), a VLA can generate and execute robotic arm control actions that enable the robot arm to carry out the task.

A number of startups including Physical Intelligence and Skild have raised hundreds of millions of dollars to develop “robotics foundation models” – agentic systems that can operate general purpose robotics. Many of these startups ultimately have their eye on developing general purpose humanoid robots which would certainly change the nature of work and warfare.

To reiterate, AI agent technology is still in its infancy. Today, AI agents are good at automating limited tasks like data transformation and entry, but are not yet ready to be deployed in mission critical systems like autonomous vehicle operation. That being said, within the next decade, AI agents will revolutionize the way humans do work as the technology continues to improve. In the event of a potential war in the Indo-Pacific region, the US and its allies will need all the help they can get in order to uphold the cybersecurity of software systems, conduct intelligence analysis, streamline logistics, and operate autonomous vehicles. It is essential for national security stakeholders to explore AI agents’ potential to improve the technology used to defend our democracy.

As always, please reach out if you or anyone you know is building AI for national security, and please let me know your thoughts (or anything I missed) on developing and deploying AI agents for national security customers.

[1] Some of my favorite pieces on agents that inspired this post: Agents Are The Future Of AI. Where Are The Startup Opportunities?; AI Agents & New Age of Software; Moving from AI Assistants to AI Agents; Beyond RPA: How LLMs are ushering in a new era of intelligent process automation

[2] APIs act as “bridges” between different software applications, enabling them to interact and share functions and data in a structured and secure way, without needing to know how the other system works internally.

[4] Today, sockpuppet accounts are manually managed by humans, acting like real malicious users in order to gain access to malicious websites and forums.
