AR/VR and the Future of National Security

The future of IVAS, Meta's AR glasses, generative 3D models, and more

If I’m being honest, I’m a VR hater. I first experimented with augmented and virtual reality (AR/VR) about a decade ago when my dad brought home an Oculus Rift VR headset. For the first few days, my brothers and I fought over who got to use it; we were all excited to play cool immersive VR games like Superhot and Minecraft. However, we quickly got bored of the headset, which was clunky, isolating, and nausea-inducing. I frequently had to run to the restroom for fear of puking while using it. The headset has since sat on a shelf in my parents’ house, untouched for years.

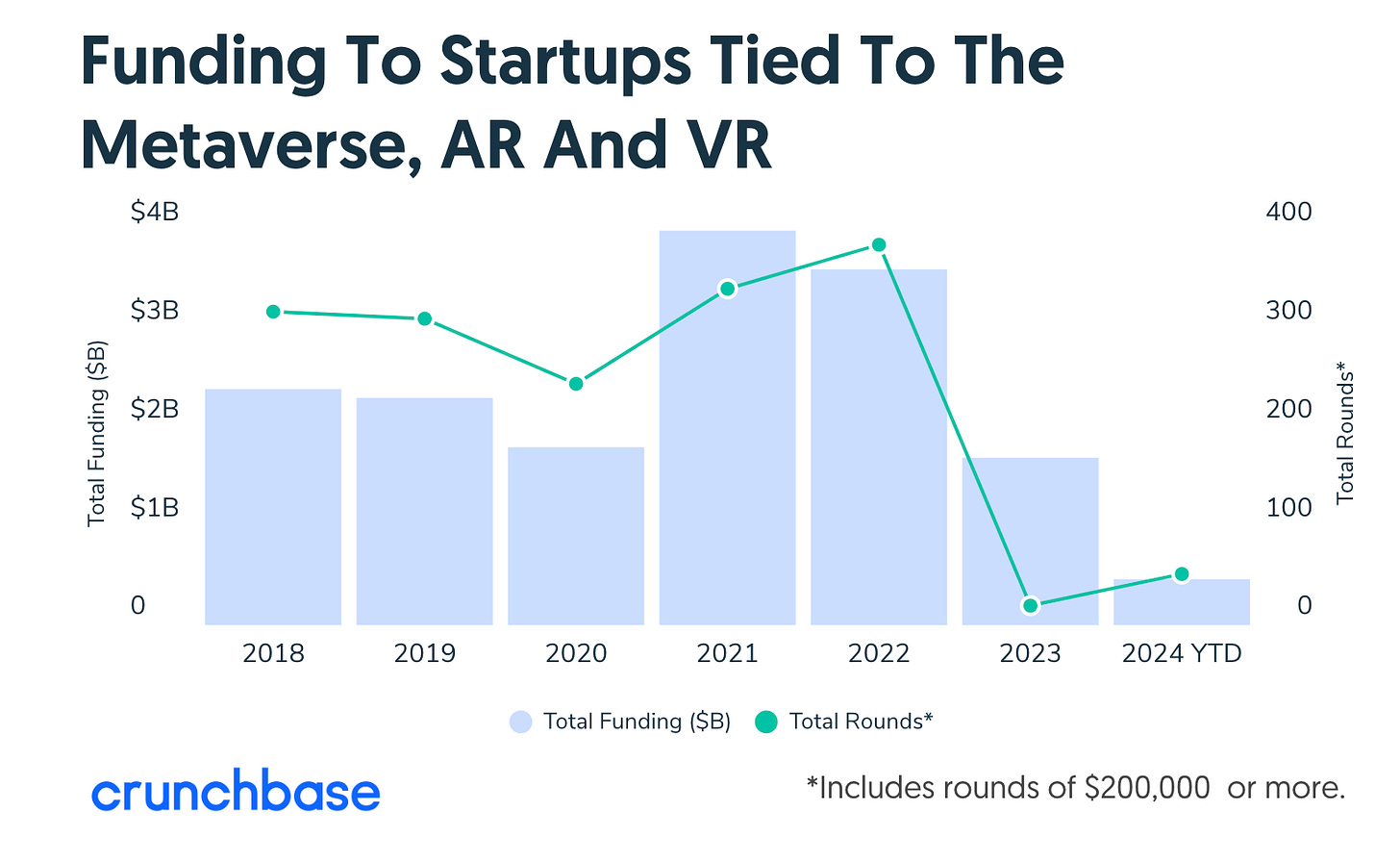

Much like my family's initial excitement over our Oculus headset, venture capital (VC) funding for AR/VR startups has cooled in recent years. Funding for AR/VR startups (and for “the metaverse” as a whole) peaked in 2021 and 2022 and has since declined, largely because the technology has matured more slowly than anticipated.

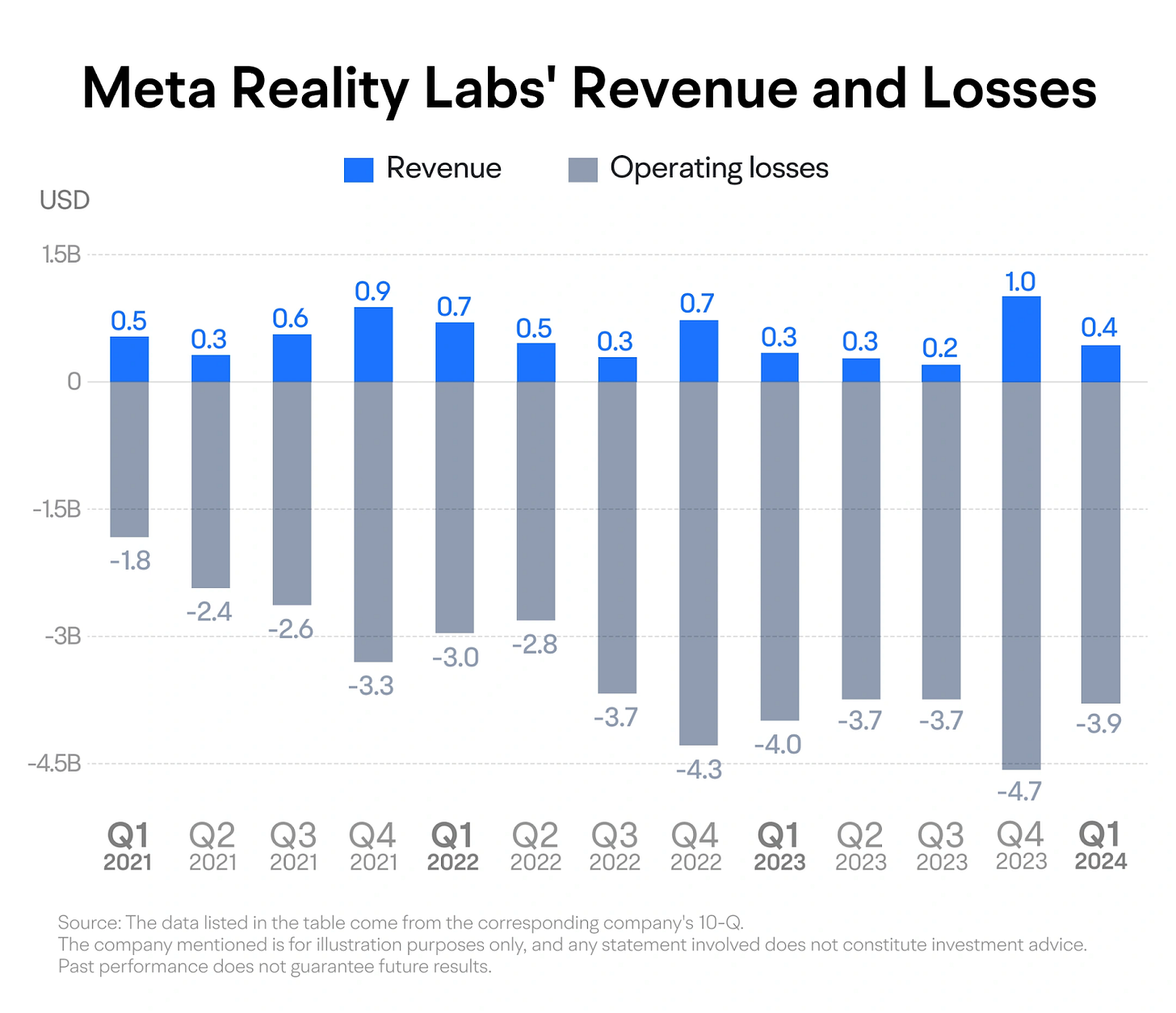

Despite waning VC interest, however, AR/VR technology has continued to advance, driven by substantial research and development investments from tech giants like Meta and Apple. Since its $2B acquisition of Oculus in 2014, Meta has spent $63B on Reality Labs, its division focused on AR/VR technology. Similarly, Apple spent billions developing its Vision Pro, which was released in February 2024.

In addition to corporate investment, the Department of Defense (DoD) continues to invest heavily in AR/VR technology through the Army’s $22B Integrated Visual Augmentation System (IVAS) program of record. New opportunities to apply AR/VR to national security are also emerging, as the Army recently signaled its willingness to replace Microsoft as the prime contractor responsible for IVAS.

Given ongoing corporate investments and recent advancements in AR/VR technology, along with new developments in the military's AR/VR programs, I decided to reassess my own dislike of AR/VR and explore whether these innovations could unlock new opportunities for AR/VR to impact national security.

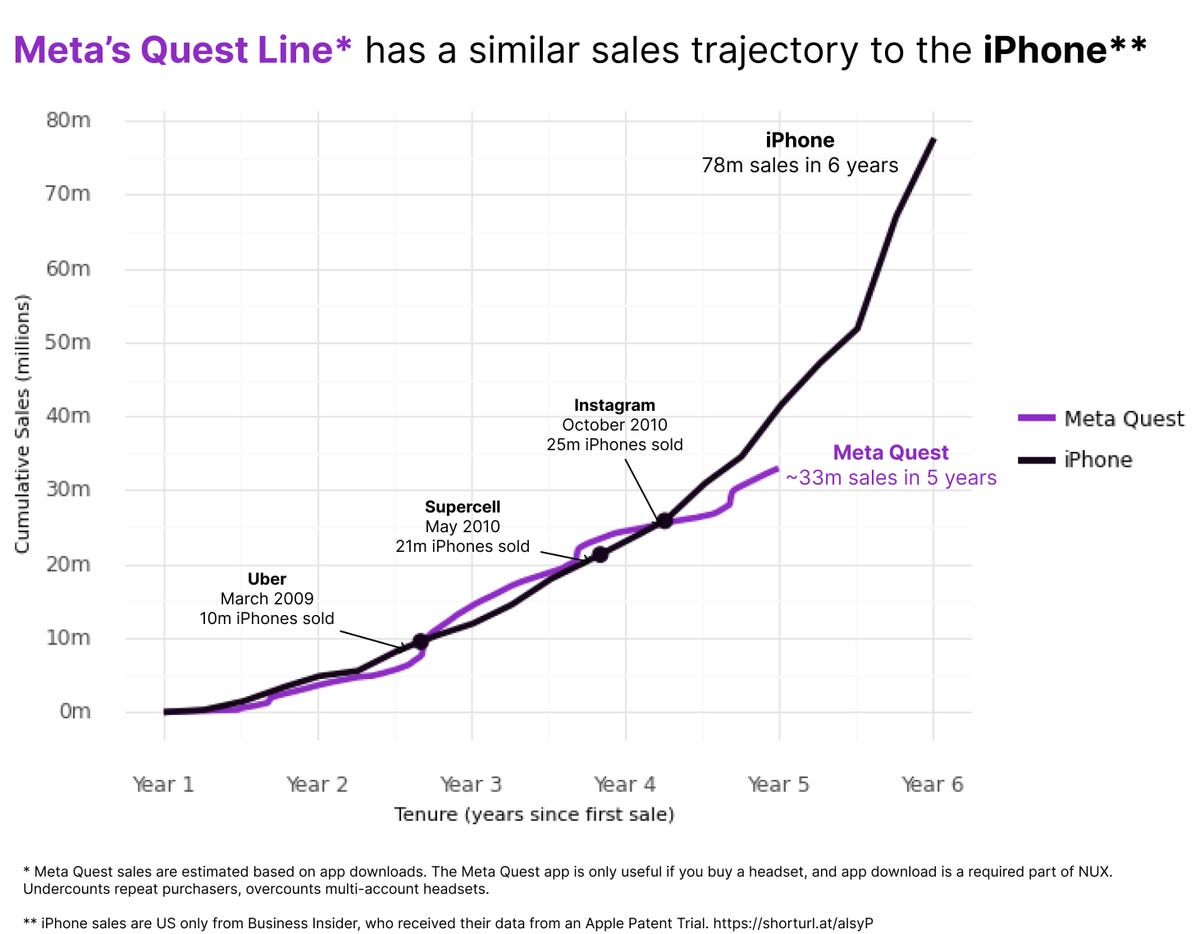

State of AR/VR Technology

Despite the billions being spent on AR/VR, it’s clear that the technology still isn’t quite there for everyday use, let alone for mission-critical scenarios such as those in conflict zones. Unlocking practical applications of AR/VR remains both a hardware and a software challenge. However, we are steadily approaching a tipping point, a breakthrough akin to an “iPhone moment,” where advances in hardware or the emergence of a transformative software application unlock AR/VR’s full potential and drive mass adoption, much as the iPhone did for smartphones.

Advances in AR technology in particular could lead to a breakout product in the near future. For example, Meta’s AI-enabled Ray-Ban glasses have proven remarkably popular: Meta has sold over 700,000 pairs since October 2023. Although the glasses lack true AR capabilities (they have cameras, microphones, and speakers but no display), they are just the beginning of Meta’s broader strategy to develop lightweight, AI-powered AR devices.

In September 2024, Meta unveiled a prototype of its Orion AR glasses, which are equipped with holographic lenses and Meta AI. Orion’s lenses are transparent, allowing a more natural pass-through AR experience than headsets like the Apple Vision Pro, which rely on video pass-through (the user sees the outside world as a camera feed shown on internal displays). Orion is also much lighter than existing headsets and requires minimal extra gear: just a wristband and a compact compute puck, both of which connect wirelessly to the glasses. Orion will not be available to the public for several years (likely around 2027), as it is still too expensive to produce at a price most consumers would pay. However, many tech experts believe that a lightweight product like Orion could finally unlock everyday use of AR/VR beyond gaming.

Meta’s recent work on its AR glasses also highlights the impact generative AI will have on AR/VR development and adoption. In a recent interview, Mark Zuckerberg noted that he had not expected generative AI to reach mainstream adoption before AR/VR, but that advances in generative AI are now accelerating what is possible with AR/VR.

Generative AI is improving AR/VR in a number of ways. First and foremost, conversational AI assistants like ChatGPT and Meta AI improve users’ ability to interact with their AR/VR devices, making the experience more seamless and intuitive. For example, Meta’s Ray-Ban glasses are equipped with a small generative AI model that runs fully on device and can answer users' questions. Users can ask about the weather, run a web search, or even ask Meta AI about something they see: the glasses have built-in cameras, and Meta AI understands visual as well as textual inputs. One advertisement for Meta’s glasses shows a user looking at the food in a refrigerator and generating a recipe and shopping list based on what the glasses see. In the future, Meta’s Ray-Ban glasses will also be able to translate between languages in real time.

While voice assistants are nothing new (Apple’s Siri and Amazon’s Alexa have been around for over a decade), recent advances in generative AI have greatly improved their performance, allowing users to ask a broader range of questions than ever before about multimodal inputs (text, images, etc.). As AI agent technology improves, assistants deployed on AR/VR devices will be able to complete more and more tasks for users (ordering an Uber, placing an Amazon order, booking flights, etc.) hands-free, with full context of what the user is seeing and doing.

Advances in generative AI, along with an adjacent technology called Neural Radiance Fields (NeRFs), have also made it much easier to create and manipulate digital 3D objects and environments for AR/VR. Generative AI models like NVIDIA’s Magic3D and others have proven adept at generating 3D objects from natural language prompts. Similarly, NeRFs make it much easier to convert a real-world object into a digital 3D object using ordinary photos from a standard camera. Combining generative 3D models with NeRFs greatly accelerates how quickly developers can create, update, and manipulate virtual 3D environments and objects, which end users can then use for training in simulation, maintenance, gaming, and much more.
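To make the NeRF idea a bit more concrete: a NeRF trains a neural network to predict a density (how opaque space is) and a color at any 3D point, then renders each pixel by compositing samples along the camera ray, following the volume-rendering step of the original NeRF paper. The sketch below shows only that compositing step in plain Python; the network itself is omitted, and the sample values passed in are made-up assumptions standing in for its predictions. During training, rendered pixels are compared against the real photos and the network is adjusted to reduce the error, which is how ordinary camera images become a 3D model.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one camera ray into an RGB pixel (NeRF-style volume rendering).

    densities: predicted density sigma_i at each sample (assumed to come from a trained network)
    colors:    predicted (r, g, b) color c_i at each sample
    deltas:    distance delta_i between consecutive samples along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unblocked
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample to the pixel
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light remaining after passing this segment
    return pixel


# Hypothetical ray: empty space (density 0) in front of a dense red surface.
pixel = render_ray(
    densities=[0.0, 1000.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)],
    deltas=[0.1, 0.1],
)
print(pixel)  # approximately [1.0, 0.0, 0.0]: the empty sample contributes nothing
```

Because transmittance shrinks as light passes through dense samples, anything placed behind the red surface in this example would contribute almost nothing to the pixel, which is how this rendering scheme handles occlusion.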

Generative 3D models also allow for more dynamic virtual environments. As a user moves through an environment, new elements can be rendered dynamically based on the context of the user’s interactions, without developers needing to hard-code and design every possible object state and interaction. Developers will also be able to use generative AI to build large, highly complex 3D environments more quickly (imagine being able to navigate a “digital twin” of an entire city or even an entire country!). Even if the first generated render of a 3D object or space isn’t perfect, generative models will still speed up development, since developers will only need to tweak generated objects rather than create them all from scratch.

Generative 3D models will also let end users create their own unique objects and environments in virtual worlds. End users will be able to import real-world objects into an AR/VR landscape simply by photographing them, or use models like Magic3D to generate unique 3D objects just by describing them in natural language. Language and voice models will also enable more interesting interactions with non-human avatars, which will be particularly powerful for gaming and entertainment.

To fully unlock generative AI’s potential for AR/VR, more work is needed to improve how AI models are deployed on edge devices like AR/VR headsets. Techniques like model distillation, quantization, and efficient fine-tuning will need to keep improving to produce small, highly optimized models that perform well on compute-constrained hardware. Startups like EdgeRunner and Nexa AI, as well as large tech companies like Microsoft and Meta, are working to develop high-performing small generative models that run efficiently on the edge. Chip companies like NVIDIA and Qualcomm have also recently acquired a number of startups that make AI models more efficient (NVIDIA acquired Deci AI in May 2024 and OctoAI in September 2024, while Qualcomm acquired Tetra AI in early 2024).
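As a rough illustration of why quantization matters on headsets, the sketch below applies simple symmetric 8-bit quantization to a list of model weights in plain Python. This is a generic textbook technique, not the specific method used by any of the companies named above: each 32-bit float weight is stored as a small integer plus one shared scale factor, cutting weight memory roughly fourfold at the cost of a small rounding error. The weight values are made up for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each weight w is approximated as scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest magnitude to +/-127
    # Round to the nearest integer and clamp to the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the shared scale."""
    return [scale * v for v in q]


def weight_memory_bytes(num_params, bits_per_param):
    """Approximate memory for the weights alone (ignores activations and runtime overhead)."""
    return num_params * bits_per_param // 8


weights = [0.5, -1.27, 0.02, 1.0]  # hypothetical weights
q, scale = quantize_int8(weights)
print(q)                                       # [50, -127, 2, 100]
print(dequantize(q, scale))                    # values close to the original weights
print(weight_memory_bytes(1_000_000_000, 32))  # 4000000000 bytes (~4 GB) at float32
print(weight_memory_bytes(1_000_000_000, 8))   # 1000000000 bytes (~1 GB) at int8
```

Real toolchains often use finer-grained scales (per channel or per block) and sometimes fewer than 8 bits, but the trade-off is the same: less memory and cheaper inference in exchange for some precision.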

AR/VR devices can also offload more compute-intensive workloads to external devices. For example, Meta’s Orion glasses connect wirelessly to a small compute “puck” that handles more complex AI inference tasks. In the future, it’s easy to imagine Apple’s headsets connecting to an iPhone (the next generation of which is expected to ship with significant AI compute capabilities) or another Apple device that handles AI workloads.

It’s likely that we will finally see an “iPhone moment” for AR/VR within the next few years: after years of development and billions of dollars invested, Meta or Apple (or maybe some dark horse player!) will release a lightweight, stylish device equipped with AI models (running either on the device itself or on an accompanying device) that unlock genuinely meaningful and dynamic use cases. At that point, there will be a huge opportunity for startups to build powerful applications on top of that hardware platform. So what could this kind of breakthrough mean for national security?

National Security Opportunities

There are a number of use cases in which AR/VR could make an impact on national security. The DoD is no stranger to this kind of technology. As one report points out, the DoD “has long incorporated XR [extended reality] into the heads-up and helmet-mounted displays (HUD and HMD, respectively) used by pilots and aircrew. These displays can provide dynamic flight and sensor information intended to increase the users’ situational awareness and improve weapons’ targeting. In the case of the F-35 fighter aircraft’s HMD, inputs from the F-35’s external cameras provide pilots with a 360-degree view of their surroundings; it also displays night vision and thermal imagery—all of which can be overlaid with the technical details (e.g., altitude, speed) of any detected objects.”

Imagine if every warfighter had access to the kind of situational awareness that HUDs and HMDs provide to pilots. Once the underlying technology is perfected, warfighters will be equipped with lightweight AR devices that provide a number of meaningful capabilities, potentially even replacing handheld devices running ATAK. An AR device could give warfighters information about their location and environment, help them track the status of their teammates, enhance their senses by overlaying night vision or thermal imagery onto their view, and even dynamically alert them to potential threats (e.g., highlighting a small drone that enters a warfighter’s field of view, or flagging an oncoming group of enemy soldiers detected by a teammate). The headset could connect with all other blue force sensors and platforms in the field (teammates’ headsets, sensing packages, aircraft, sensor-equipped autonomous systems, etc.), giving the warfighter full situational awareness. It could show warfighters their location on a map in the corner of their vision, along with their teammates’ locations and those of any other relevant assets.

An agentic AI assistant on the device could help warfighters direct other assets (e.g., small voice-controlled drones) or communicate with teammates. AR and VR goggles could also be used for battlefield management, showing warfighters and commanders a full, dynamic 3D view of the battlefield and all relevant assets on it. Further, AR glasses could help with maintenance and repairs. For instance, if a vehicle breaks down, a multimodal agent deployed on an AR headset could combine information from the vehicle’s user manual with visual and other sensor data from the vehicle itself to guide the user through the repair.

Based on my discussions with DoD operators who have firsthand experience using AR/VR headsets in real-world operations, I recognize that using this technology on the battlefield remains controversial. It’s clear that the technology is NOT yet ready for mission-critical use cases, and many feel that it may never be. However, some applications of AR/VR can provide real value to national security customers in the short term as the technology matures. For instance, a number of companies have emerged that use VR to improve DoD training. Startups like Street Smarts VR work with the DoD to develop realistic 3D training scenarios, allowing warfighters to train for situations that would be too dangerous or too expensive to recreate in the real world. Many have also found success using VR to virtually “practice” real-life missions before conducting them in the field. Startups like Reveal can generate realistic 3D environments from aerial imagery (e.g., drone imagery) for VR training, enabling warfighters to rehearse missions in environments that accurately represent the terrain they will need to navigate.

The DoD’s main AR/VR initiative is the Integrated Visual Augmentation System (IVAS) program of record. IVAS was first approved in 2018 as an MTA[1] rapid prototyping effort, and Microsoft was awarded an OTA[2] to develop it. IVAS is based on a militarized version of Microsoft’s HoloLens 2 and is designed to provide soldiers with both night vision and AR/VR capabilities “to increase individual soldier’s situational awareness and ability to detect, identify, and engage the enemy with direct fires. IVAS is intended to enhance collective lethality through the combination of improved communication, mobility, mission command, and marksmanship.”[3] In 2021, Microsoft was awarded a rapid fielding contract with a $22B ceiling to deliver the first version of IVAS.

However, the IVAS program has been beset by a number of challenges. The first version of Microsoft’s headset was almost universally disliked by soldiers: according to the 2022 DOT&E Annual Report, “the majority of soldiers reported at least one symptom of physical impairment to include disorientation, dizziness, eyestrain, headaches, motion sickness and nausea, neck strain and tunnel vision.” Further, “Soldiers cited IVAS 1.0’s poor low-light performance, display quality, cumbersomeness, poor reliability, inability to distinguish friend from foe, difficulty shooting, physical impairments and limited peripheral vision as reasons for their dissatisfaction.”

Microsoft even canceled development of the HoloLens 3, the next generation of its AR headset, and laid off a number of employees dedicated to AR/VR.

In August 2024, the Army suggested that it may replace Microsoft as the prime contractor for “IVAS Next” (the next generation of IVAS), creating an opportunity for primes and startups alike to bring fresh ideas and cutting-edge AR/VR technology to the DoD. Shortly afterward, Anduril announced that it would begin collaborating with Microsoft to improve the IVAS platform. In particular, Anduril has integrated its Lattice software into IVAS to enable warfighters to track threats in real time across the battlespace. Lattice uses “sensor fusion, computer vision, edge computing, machine learning, and artificial intelligence (AI) to detect, track, and classify every object of interest in the operator’s surroundings.” Integrating this software into IVAS will give warfighters hands-free, integrated access to this data. Palmer Luckey, founder of both Anduril and Oculus VR, stated, “[IVAS] is my top priority at Anduril, and it has been for some time now. It’s one of the Army’s most critical programs being fielded in the near future, with the goal of getting the right data to the right people at the right time.” Regardless of whether Microsoft is replaced, Anduril will likely remain a key player in the development of AR/VR for national security.

As the software and hardware needed for widespread AR/VR adoption improve, it seems we are close to escaping the “trough of disillusionment” of the AR/VR hype cycle and climbing the “slope of enlightenment.” As the DoD continues to field and integrate ever more sensor-rich platforms on the battlefield as part of its Combined Joint All-Domain Command and Control (CJADC2) strategy, it’s crucial that warfighters can make the best use of that data to enhance their situational awareness and decision-making. If developed and fielded properly, AR/VR will provide a hands-free, integrated way for warfighters to leverage this information to win wars. Honestly, I’m not sure exactly when AR/VR’s iPhone moment will occur, but it is coming soon, and the DoD must be ready to act when it arrives. There will be an opportunity at the application layer for startups to help make this technology valuable and usable for warfighters.

Those developing national security applications for AR/VR should initially focus on solving specific problems that provide real value to end users. Many feel that IVAS has struggled because it tried to “boil the ocean” by providing too many capabilities at once. Developing limited but useful AR/VR applications will demonstrate the technology’s utility to end users and spur adoption. Over time, use cases can become more complex as the technology matures and end users grow comfortable with it. For instance, one application that would provide immediate value is AR-based blue force tracking. Simply using AR to distinguish allies from enemies could save lives by reducing friendly fire incidents, and it would simplify battle management for end users.
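To illustrate the kind of narrow, high-value logic such an application might start with, here is a deliberately simplified, hypothetical sketch in Python. It assumes each teammate's device shares its position in a common local coordinate frame (meters east and north of a reference point) and that the headset has a list of detected people or vehicles; the `Track` type, the `label_detections` function, and the 10-meter matching radius are all illustrative assumptions, not part of IVAS, Lattice, or any fielded system. Any detection close to a reported friendly position is labeled friendly; everything else is labeled unknown rather than enemy.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """A position in a hypothetical shared local frame, in meters from a reference point."""
    track_id: str
    east_m: float
    north_m: float


def label_detections(detections, friendly_reports, gate_m=10.0):
    """Label each detection 'friendly' if it lies within gate_m of any reported friendly position.

    Detections with no nearby friendly report are labeled 'unknown', not 'enemy':
    a missing blue force report is not proof of hostility.
    """
    labels = {}
    for det in detections:
        nearest_m = min(
            (math.hypot(det.east_m - f.east_m, det.north_m - f.north_m) for f in friendly_reports),
            default=math.inf,  # no friendly reports at all, so nothing can match
        )
        labels[det.track_id] = "friendly" if nearest_m <= gate_m else "unknown"
    return labels


friendlies = [Track("alpha-1", 0.0, 0.0), Track("alpha-2", 100.0, 50.0)]
detections = [Track("det-1", 3.0, 4.0), Track("det-2", 60.0, 60.0)]
print(label_detections(detections, friendlies))  # {'det-1': 'friendly', 'det-2': 'unknown'}
```

A real system would also have to handle stale or spoofed position reports, GPS error, and authenticated identification, which is part of why labeling unmatched tracks as unknown is the safer default in this sketch.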

As always, please let me know your thoughts! I know that this technology in particular is controversial and rapidly changing. And please reach out if you or anybody you know is building a startup at the intersection of national security and technology.

For those interested in learning more about the current and future state of AR/VR technology, I highly recommend checking out the following resources, which inspired me to write this blog post in the first place:

Ben Thompson’s Orion Product Review

Ben Thompson’s Interview with Andrew Bosworth, Meta’s CTO and former Head of Reality Labs

Ben Thompson’s Interview with Hugo Barra, Meta’s former VP of Virtual Reality

Acquired’s Interview with Mark Zuckerberg

[1] MTA = Middle Tier of Acquisition. An MTA is an “acquisition pathway used to rapidly develop fieldable prototypes within an acquisition program to demonstrate new capabilities and/or rapidly field production quantities of systems with proven technologies that require minimal development.”

[2] OTA = Other Transaction Authority. An OTA is a flexible contracting mechanism used by the DoD to streamline the acquisition of technologies and prototypes. OTAs allow for more agile and less regulated agreements than traditional federal contracts, enabling faster collaboration with non-traditional defense contractors, startups, and commercial companies.

[3] DoD Director, Operational Test and Evaluation (DOT&E), FY23 Annual Report

Note: The opinions and views expressed in this article are solely my own and do not reflect the views, policies, or position of my employer or any other organization or individual with which I am affiliated.